Item10635: Wrong international character display.

Priority: Urgent

Current State: Closed

Released In: 2.0.0

Target Release: major

Current State: Closed

Released In: 2.0.0

Target Release: major

$Foswiki::cfg{UserInterfaceInternationalisation} = 1;

$Foswiki::cfg{Languages}{cs}{Enabled} = 1; #all enabled

$Foswiki::cfg{UseLocale} = 1;

$Foswiki::cfg{Site}{Locale} = 'sk_SK.UTF-8';

$Foswiki::cfg{Site}{CharSet} = 'UTF-8';

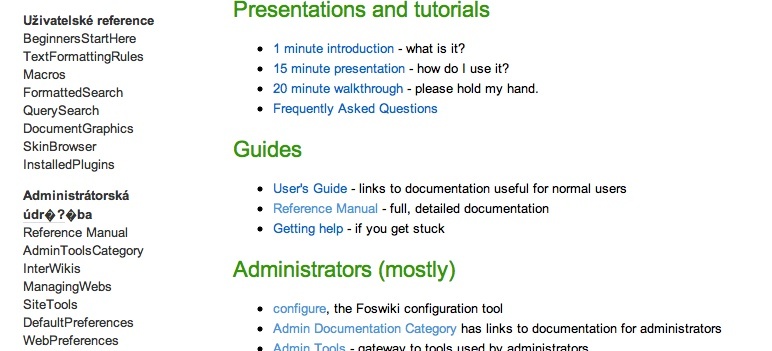

When displaying any page, the character 'ž' in the same menu WebLeftBarExample displayed differently.

#: PatternSkin/data/System/WebLeftBarExample.txt:16 msgid "User Reference" msgstr "Uživatelské reference" #"ž" displayed correctly in the browser in the #: PatternSkin/data/System/WebLeftBarExample.txt:26 msgid "Admin Maintenance" msgstr "Administrátorská údržba" # the "ž" is displayed wrong in the browserFoswiki RC1, you can reproduce it:

- configure for utf8 as above

- select interface language czech (what has a wrong translation: _jméno_jazyka)

- show any page a watch the sidebar (default pattern skin)

Administrátorská údržba - the same text displayed OKWhen disable UseLocale in cfg:

$Foswiki::cfg{UseLocale} = 0;

this bug disappear, so using real SiteLocale is not recommended.

The dirty hack, what solving the issue - in the first try is in Render.pm

$text = Encode::decode("utf-8", $text); #here

$text =~ s($STARTWW

(?:($Foswiki::regex{webNameRegex})\.)?

($Foswiki::regex{wikiWordRegex}|

$Foswiki::regex{abbrevRegex})

($Foswiki::regex{anchorRegex})?)

(_handleWikiWord( $this, $topicObject, $1, $2, $3))gexom;

$text = Encode::encode("utf-8", $text); #and here

later will try adding a diff file...

-- JozefMojzis - 14 Apr 2011

So, Encode::decode converts octets into an unicode-aware "character" string (with utf flag set), and encode does the opposite.

I am kind of disappointed that we treat $text as octets by default. We should really be treating $text as character data rather than octets for as much of our code as possible, right?

-- PaulHarvey - 19 May 2011

This explains why I've been having issues with MongoDB enabled. MongoDB returns character strings, with utf flag set. See Item10748 where we added a similar fix at a much later stage in the rendering pipeline.

-- PaulHarvey - 19 May 2011

Unfortunately, the "quick fix" above solving the main problem from this Task, but somewhere deeper cause double encoding or something like - and therefore it is not correct.

Encoding problems like this are the main reason, why is good encode/decode from/to utf8 as close to IO as possible... (e.g. for example in the Foswiki::readFile). IMHO, Foswiki should internally use only and only utf-8 characters and not octets/bytes.

One possible solution - not ideal, but usable: Foswiki::readFile (or the new Store::VC interface) should read all files from the filesystem in binary mode (as Store::VC it does). If the SIteCharset is not utf8, should convert (recode) the text files from the SiteCharset to utf8 and return utf8-flagged string to Foswiki. From this moment, everything is done in utf8 . At the write, from utf8 convert to SiteCharset and write binary. (here can be problem of lost characters,, e.g. when someone want convert greek omega into 8859-1, but possible workaround with html entities.). If the SiteCharset is UTF8 - no conversions are needed.

At the DB level alike. If the DB is not utf8 - convert before read/write. If the DB is utf8 (like Mongo) = no conversions. And this allow having multiple DB's with different encodings and so on.

This way will not break anything at Topic level (so old 88591 Topics remain untouched). Unfortunately, this will break much things internally. (Will need revisit every regex, especially like /\177/ or so.). Will probably break much of plugins. and so on. But doing it sooner is better than later. If here is no another reason: it is easier optimize a debugged code as debugging the (badly) optimized one. ($0.02) CGI.pm (version 3.43 shipped with Ubuntu 10.04 LTS and I think Debian Lenny is broken, see Support.Faq25) and FCGI.pm (If you're using ModFastCGIEngineContrib) really helps a lot.

-- PaulHarvey - 08 Nov 2011

checked in 1.1.5 - everything is OK, so "No action required" - screenshot:

- screenshot showing different behavior in verbatim block:

ItemTemplate edit

| Summary | Wrong international character display. |

| ReportedBy | JozefMojzis |

| Codebase | 1.1.3 beta1, trunk |

| SVN Range | |

| AppliesTo | Engine |

| Component | I18N, Unicode |

| Priority | Urgent |

| CurrentState | Closed |

| WaitingFor | |

| Checkins | |

| TargetRelease | major |

| ReleasedIn | 2.0.0 |

| CheckinsOnBranches | |

| trunkCheckins | |

| masterCheckins | |

| ItemBranchCheckins | |

| Release01x01Checkins |

| I | Attachment | Action | Size | Date | Who | Comment |

|---|---|---|---|---|---|---|

| |

TestTopic0.txt | manage | 222 bytes | 14 Apr 2011 - 15:31 | JozefMojzis | the topic source text |

| |

foswiki_error.jpg | manage | 100 K | 14 Apr 2011 - 15:06 | JozefMojzis | screenshot |

| |

foswiki_error2.jpg | manage | 39 K | 14 Apr 2011 - 15:30 | JozefMojzis | screenshot showing different behavior in verbatim block |

Edit | Attach | Print version | History: r16 < r15 < r14 < r13 | Backlinks | View wiki text | Edit wiki text | More topic actions

Topic revision: r16 - 05 Jul 2015, GeorgeClark

The copyright of the content on this website is held by the contributing authors, except where stated elsewhere. See Copyright Statement.  Legal Imprint Privacy Policy

Legal Imprint Privacy Policy

Legal Imprint Privacy Policy

Legal Imprint Privacy Policy